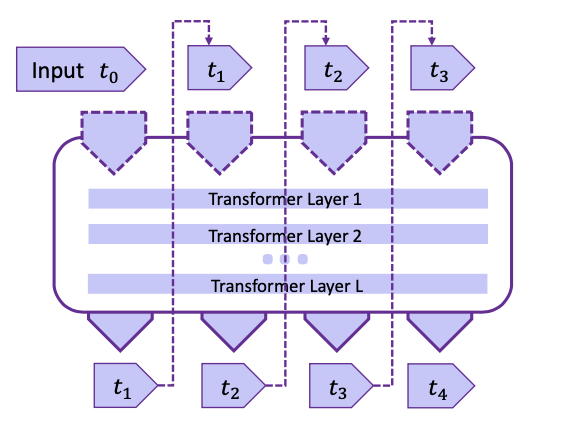

Auto-regressive decoding is fundamental to LLM inference because it is how the model generates coherent, contextually appropriate text. Each new token is conditioned on the full sequence produced so far: the prompt plus every previously generated token. This conditioning lets LLMs produce relevant and fluent text sequences.

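At each decoding step $t$, the next token is chosen and appended to the current sequence:

$$
y = \arg\max_{y' \in \mathcal{V}} P(y' \mid X_t), \qquad X_{t+1} = X_t \oplus y
$$

Here, $P(y \mid X_t)$ represents the probability of the next token $y$ given the current sequence $X_t$, $\mathcal{V}$ is the model's vocabulary, and $\oplus$ denotes the concatenation operation. The $\arg\max$ function selects the most probable next token at each step.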
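The greedy selection rule described above can be illustrated with a small sketch. It repeatedly asks a model for the next-token distribution given the current sequence, picks the most probable token, and concatenates it onto the sequence until an end-of-sequence token appears or a length limit is hit. The "model" here is a hypothetical hard-coded bigram table (`BIGRAM_PROBS`, `toy_next_token_probs`) standing in for a real LLM. A real model would condition on the entire sequence rather than only the last token. The `greedy_decode` helper and its parameters are likewise illustrative, not any library's API.

```python
from typing import Callable, Dict, List

# Hypothetical toy "language model": a bigram table, so P(y | X_t) depends only
# on the last token of X_t. A real LLM conditions on the whole sequence.
BIGRAM_PROBS: Dict[str, Dict[str, float]] = {
    "<bos>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "<eos>": 0.2},
    "a": {"dog": 0.7, "cat": 0.3},
    "cat": {"sat": 0.8, "<eos>": 0.2},
    "dog": {"ran": 0.6, "<eos>": 0.4},
    "sat": {"<eos>": 1.0},
    "ran": {"<eos>": 1.0},
}


def toy_next_token_probs(sequence: List[str]) -> Dict[str, float]:
    """Return P(y | X_t) for each candidate token y (toy bigram lookup)."""
    return BIGRAM_PROBS[sequence[-1]]


def greedy_decode(
    next_token_probs: Callable[[List[str]], Dict[str, float]],
    prompt: List[str],
    max_new_tokens: int = 10,
    eos: str = "<eos>",
) -> List[str]:
    """Greedy auto-regressive decoding: argmax, then concatenate, repeated."""
    sequence = list(prompt)  # X_0: the prompt
    for _ in range(max_new_tokens):
        probs = next_token_probs(sequence)  # distribution P(y | X_t)
        y = max(probs, key=probs.get)       # argmax over candidate tokens
        sequence = sequence + [y]           # X_{t+1} = X_t concatenated with y
        if y == eos:                        # stop once end-of-sequence is chosen
            break
    return sequence


print(greedy_decode(toy_next_token_probs, ["<bos>"]))
# prints ['<bos>', 'the', 'cat', 'sat', '<eos>']
```

Each loop iteration needs the output of the previous one, so generation is inherently sequential. This dependency is why auto-regressive decoding dominates inference latency for long outputs.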