Motivation

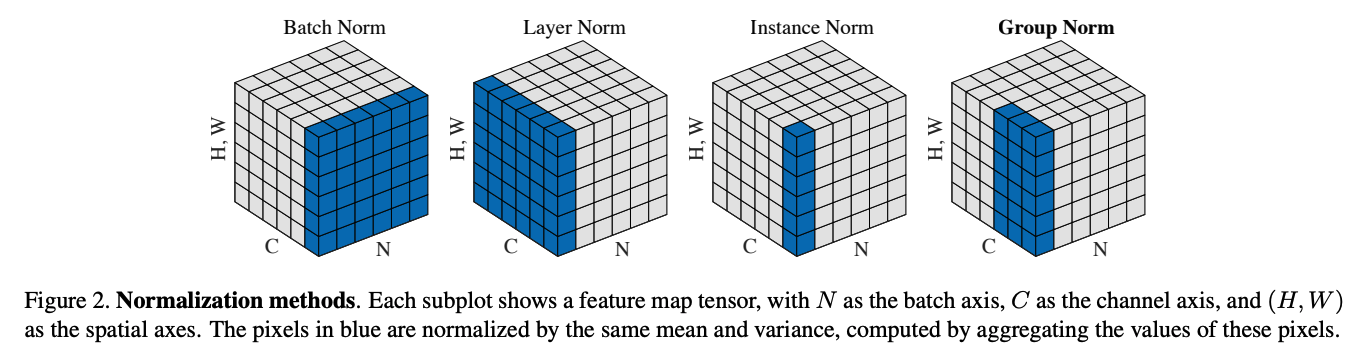

LayerNorm is often preferred in models that process sequences, especially when sequence length varies within a batch. BatchNorm, on the other hand, works well in many standard deep learning models with large batches of fixed-size inputs (such as images), where its ability to stabilize training through batch-wide statistics is beneficial. LayerNorm is widely used in architectures that process sequence data, such as RNNs and Transformers.

Layer Norm

Layer normalization is a technique used to normalize the inputs across the features in a layer. Given an input vector $x = (x_1, \dots, x_d)$ of length $d$, layer normalization is performed as follows:

- Compute the Mean:

  $$\mu = \frac{1}{d} \sum_{i=1}^{d} x_i$$

- Compute the Variance:

  $$\sigma^2 = \frac{1}{d} \sum_{i=1}^{d} (x_i - \mu)^2$$

- Normalize: Each feature in the input is normalized by subtracting the mean and dividing by the standard deviation:

  $$\hat{x}_i = \frac{x_i - \mu}{\sqrt{\sigma^2 + \epsilon}}$$

  Here, $\epsilon$ is a small constant added for numerical stability.

- Apply Scaling and Shifting: Finally, the normalized values are scaled and shifted using learned parameters $\gamma$ and $\beta$:

  $$y_i = \gamma_i \hat{x}_i + \beta_i$$

  where $\gamma$ and $\beta$ are trainable parameters that allow the network to retain its representational power.
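
The four steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `layer_norm`, the default `eps=1e-5`, and the choice to normalize over the last axis are assumptions made for the example.

```python
import numpy as np

def layer_norm(x, gamma, beta, eps=1e-5):
    """Layer normalization over the last (feature) axis.

    x:     array of shape (..., d)
    gamma: scale parameters of shape (d,)
    beta:  shift parameters of shape (d,)
    eps:   small constant for numerical stability (assumed default)
    """
    # Step 1: mean over the features of each sample
    mu = x.mean(axis=-1, keepdims=True)
    # Step 2: variance over the features (divides by d, not d - 1)
    var = x.var(axis=-1, keepdims=True)
    # Step 3: subtract the mean, divide by the stabilized standard deviation
    x_hat = (x - mu) / np.sqrt(var + eps)
    # Step 4: scale and shift with the learned parameters
    return gamma * x_hat + beta

# Two samples with very different scales, four features each
x = np.array([[1.0, 2.0, 3.0, 4.0],
              [10.0, 20.0, 30.0, 40.0]])
gamma = np.ones(4)   # identity scale, as at a typical initialization
beta = np.zeros(4)   # zero shift

y = layer_norm(x, gamma, beta)
print(y.mean(axis=-1))  # per-sample means, close to 0
print(y.std(axis=-1))   # per-sample standard deviations, close to 1
```

Because the statistics are computed per sample, the result for one row does not depend on the other rows in the batch, which is why the approach remains usable when batches are small or sequence lengths vary.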